
Talk to My Document: A Serverless RAG approach using Hugging Face, Amazon Lex and Amazon DynamoDB

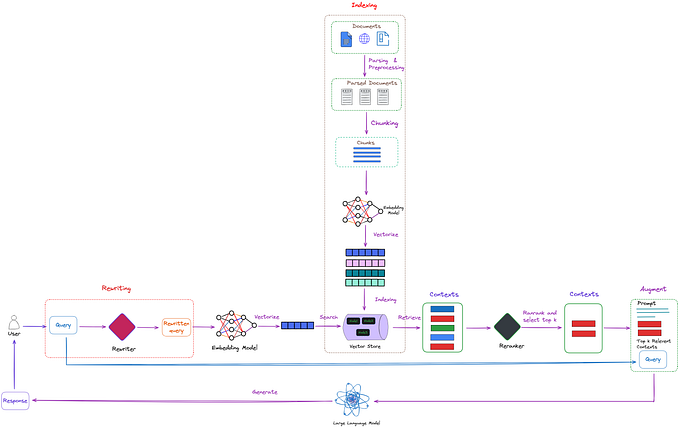

Retrieval-Augmented Generation (RAG) has become a popular approach for building context-aware AI bots. Imagine being able to have a conversation with your documents: you ask a question and receive a precise answer instantly. That is the promise of RAG. It combines two capabilities: a retrieval step that finds the passages of your documents most relevant to a question, much as a search engine would, and a generation step in which a large language model (LLM) writes an answer grounded in those passages.
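To make the retrieve-then-generate idea concrete, here is a minimal Python sketch of the retrieval and prompt-building half. It is an illustration under stated assumptions, not the pipeline built in this post: a toy bag-of-words vector (the hypothetical `embed` helper) stands in for a Hugging Face embedding model, and the final LLM call is omitted, so the code stops at the augmented prompt that would be sent to the model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (hypothetical stand-in for a real
    Hugging Face embedding model): lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Retrieval step: rank document chunks by similarity to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Augmentation step: place the retrieved chunks before the question.
    The generation step would pass this string to an LLM (not shown)."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{joined}\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    chunks = [
        "The invoice is due within 30 days of delivery.",
        "Returns are accepted for 14 days after purchase.",
        "Our office is closed on public holidays.",
    ]
    question = "When is the invoice due?"
    print(build_prompt(question, retrieve(question, chunks, k=1)))
```

Keeping retrieval and generation as separate steps is what lets the answer be grounded in your own documents: the model only sees the passages that the retrieval step selected.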


In this post, I will show you how to build a RAG-based chatbot using three tools: Hugging Face for the language models, Amazon Lex for the conversational interface, and Amazon DynamoDB for scalable vector storage. The chatbot answers questions based on the content of your documents, giving you instant access to the information you need. By the end of this tutorial, you’ll understand how to build a conversational interface to your documents that makes retrieving information as easy as having a chat.
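As a rough picture of how the three pieces could fit together, the sketch below builds an AWS Lambda handler that Amazon Lex V2 could call for fulfillment. Several things are assumptions made for illustration: the item layout (`chunk_id`, `text`, `embedding`), the intent name `AskDocument`, and the injected `embed`, `load_items` and `answer` helpers are all hypothetical. In a real deployment, `load_items` might read the table with boto3, while `embed` and `answer` would call Hugging Face models. Embeddings are stored as `Decimal` values because boto3 does not accept Python floats for DynamoDB number attributes, and the similarity ranking here happens in application code by brute force.

```python
import math
from decimal import Decimal


def to_item(chunk_id: str, text: str, vector: list[float]) -> dict:
    """Shape a document chunk as a DynamoDB-style item (assumed layout).
    Floats become Decimal because boto3 rejects Python floats."""
    return {
        "chunk_id": chunk_id,
        "text": text,
        "embedding": [Decimal(str(x)) for x in vector],
    }


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_chunks(query_vec: list[float], items: list[dict], k: int = 2) -> list[str]:
    """Brute-force retrieval over items read back from the table:
    convert Decimals to float, score each item, keep the best k texts."""
    scored = [
        (cosine(query_vec, [float(v) for v in it["embedding"]]), it["text"])
        for it in items
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


def lex_close(intent_name: str, message: str) -> dict:
    """Amazon Lex V2 fulfillment response that closes the intent."""
    return {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            "intent": {"name": intent_name, "state": "Fulfilled"},
        },
        "messages": [{"contentType": "PlainText", "content": message}],
    }


def make_handler(embed, load_items, answer):
    """Build a Lambda handler from hypothetical injected helpers:
    embed(text) -> vector, load_items() -> items,
    answer(question, context_chunks) -> generated text."""
    def handler(event, context):
        question = event["inputTranscript"]  # user's utterance from Lex V2
        intent = event["sessionState"]["intent"]["name"]
        chunks = top_chunks(embed(question), load_items(), k=2)
        return lex_close(intent, answer(question, chunks))
    return handler


if __name__ == "__main__":
    items = [
        to_item("c1", "Refunds take 5 days.", [1.0, 0.0]),
        to_item("c2", "Shipping is free.", [0.0, 1.0]),
    ]
    handler = make_handler(
        embed=lambda q: [1.0, 0.1],          # fake query embedding
        load_items=lambda: items,            # fake table read
        answer=lambda q, ctx: ctx[0],        # fake "LLM": echo best chunk
    )
    event = {
        "inputTranscript": "How long do refunds take?",
        "sessionState": {"intent": {"name": "AskDocument"}},
    }
    print(handler(event, None)["messages"][0]["content"])
```

Injecting the helpers keeps the handler testable without AWS credentials; swapping the fakes for real boto3 and Hugging Face calls leaves the Lex request and response handling unchanged.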